When Tempero first started out 10 years ago, it focused solely on moderation, which now forms just one part of our wider social media management services.

It’s definitely the less ‘cool’ side of social media management, but a very important side nonetheless, and not just in terms of brand protection, but user protection too.

Before breaking away from Granada Media and starting Tempero with Dom Sparkes, I worked for a number of years as a child counselor on the front line with children who had been abused. That experience left a lasting impression on me, and when I stepped into the world of online communities I could immediately see the need for comprehensive guidelines and policies for managing children’s user-generated content. Even while working on TV-based communities such as Coronation Street and Emmerdale 14 years ago, I could see some of the key dangers of creating social spaces where children and young people communicated with adults, especially when some of these online fan clubs met up in person in their local areas.

One of Tempero’s first major client wins was with the BBC back in 2005, and as part of that we started working on various CBBC projects, where child safety really was the main priority. Not long after came Disney, NSPCC and Aardman. From the very start we have put child safety and stringent policies at the forefront of everything we do, ensuring that the fragments of information a child could unwittingly give away aren’t easily pieced together by anyone wishing to do so. The moderation guidelines we create for our clients cover areas such as identifiable details in a photo or video (school or club names, street signs and so on); whether a child is carrying a bag with an easily identifiable school emblem or their full name on it; and whether the child names a club, pool or park they regularly go to, or another social networking site they’re part of. These are just a few examples.

We understand that as well as keeping children safe, it’s important to give them a vibrant, engaging space in which they can interact. The ‘safety’ part generally points towards pre-moderation (content does not go live on the website until it has been reviewed by a moderator), but ensuring a quick enough turnaround on content to keep that sense of vibrancy can be costly. We strongly believe that if you’re going to build a space for children to interact online, budgeting for a safe and robust moderation process should be the main focus of any project planning, and that means using human beings to make a judgment on the content, not just technology. We’ve recently seen, thanks to Habbo, what can happen when these processes aren’t in place. So if you find yourself in the enviable position of owning a successful children’s online community, it goes without saying that it’s your responsibility to ensure your users are kept safe.

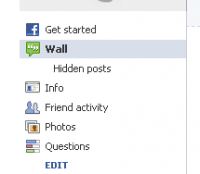

We’d like to see all brands that build pages on sites such as Facebook, or on their own social platforms, put child safety at the forefront when deciding how to manage and interact with their users, fans and followers. And by ‘all brands’ we don’t just mean ones that have children as their sole focus. One report earlier this year suggested that 38% of all young people on Facebook are under the age of 13, and these children are likely, at some point, to be interacting with your brand.

Tempero will be hosting a free event on Tuesday 25th September as part of Social Media Week London to discuss this topic further. We’ve got some great speakers joining us from ChildLine, Aardman and the BBC, and the event will be hosted by John Carr. Please register here for a place.

