Last month, Simon Fuller from E-Commerce Law & Policy spoke to Tom Walker, our Community Manager and child-safety expert, about the challenges of moderating content in order to protect children online.

What kind of content does your work involve moderating – have you seen any trends in terms of the kind of content that is particularly prevalent?

I find that the content is quite wide in scope, depending on the focus of the particular project. I work with ChildLine, which provides a space where young people can talk about the issues that affect them, so the content is very much about their experiences. At BBC Bitesize, the content is more about revision and learning. I also work with The Tate Movie Project, a community designed to inspire young people to learn about, and get involved with, animation. The content here is more creative and can be in [multiple] formats.

There are two [key] trends. Firstly, young people habitually use the internet to socialise, even when the website discourages them from doing so. The longer a conversation continues, the more likely it is that they will attempt to exchange contact information. For projects of a sensitive nature, it is essential that anonymity is preserved to protect members. Secondly, young people use the internet creatively. They often find ways to use a platform that its designers did not expect, and they revel in the knowledge that someone is listening.

What can operators of online communities and websites do to protect users and prevent the appearance of inappropriate content?

Moderation is crucial for any online community involving children and young people. If possible, content [should] not go live until it has been checked by a trained moderator. There are tools that can help by catching inappropriate words or personal information, but young people are adept at personalising language to evade filters, and there really is no substitute for a human being when it comes to analysing language. Clear procedures for escalating unsuitable content are a must too, because time can be critical in many safeguarding situations. Finally, a strong registration process is a good idea. Pre-checking user profiles and requiring a parental email address help to ensure members’ safety.

What are the common mistakes brands or social media websites make in dealing with such content?

Many platforms have excellent reporting functionality and, sometimes, very good content management systems, but do not commit enough resources to ensuring that a trained moderation team is available to review the content. The most frequent complaint about social networking that I hear from young people is that their report was not acted upon.

How savvy are individuals becoming in their efforts to circumvent content moderation? What are we seeing and is this kind of behaviour becoming more common?

I wouldn’t say that attempts to circumvent site rules are becoming more common. There have always been people looking to disrupt or offend and, where young people are concerned, you sometimes see comments that raise deeper concerns about the poster’s intentions.

We do see some very inventive attempts to disguise inappropriate content though. Many an unsuspecting moderator has clicked on a link and been shocked at what lurked behind it. We’ve seen complex systems of code designed to hide personal information or convey offensive messages. The way young people speak and the memes they laugh at constantly evolve, so our team spend a lot of time researching to make sure that they catch things that a filter might miss.

Recent news about inappropriate activity by users on the Habbo Hotel virtual world has no doubt heightened concerns about what children can encounter online – what needs to be done to prevent a repeat of the problem at Habbo?

The owners of large online environments need to think carefully about the design of the communities they operate and to take more responsibility for the content within them. Strong reporting processes, swift action on reports and a well-trained moderation team are essential. There is a general assumption that education can help to protect members of the community, but this overestimates young people’s appetite for reading guidelines and terms and conditions, and underestimates how much deliberate risk-taking they engage in. It’s not enough for operators to feel that, because they have put some safety information out there, they’ve done enough to protect the visitors to their community. We wouldn’t accept this approach from a youth club, so we shouldn’t accept it online.

Given the potential for children to interact with adults through such social networking sites, what protective steps can be taken? Should Facebook rethink its age verification process?

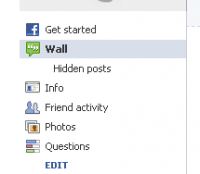

Young people will always find ways to seek out content aimed at those older than them. With a community the size of Facebook, the key is strong reporting processes backed up by a team large enough to deal with the reports that come in. There are signs that Facebook is rethinking its reporting process to make it easier for younger users of the site. I particularly liked the idea it floated at its Facebook Compassion Research day of allowing 13- and 14-year-olds to share troubling content with a trusted adult and to seek that adult’s advice, but this would depend on members identifying themselves as 13 or 14.